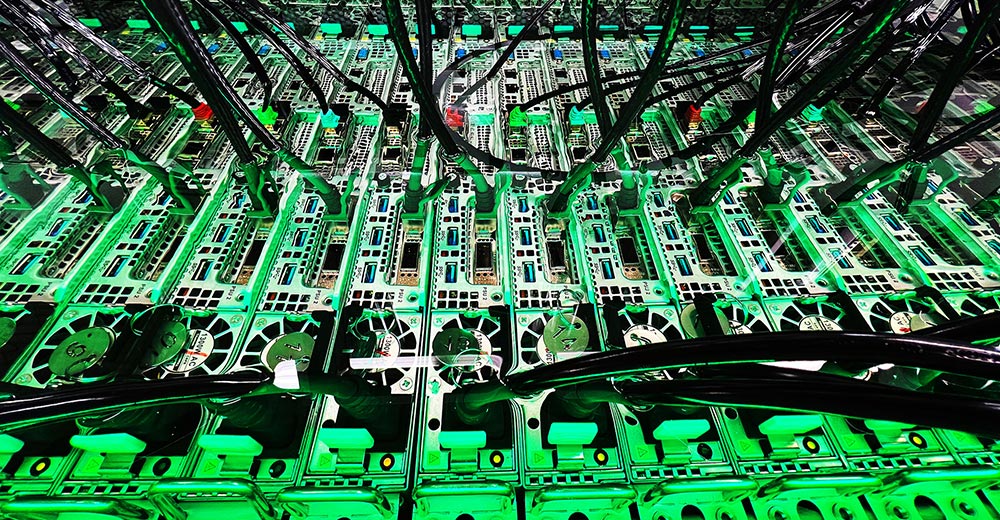

A desire for sustainable data center options and a heat wave caused by increasingly powerful computer chips are driving the adoption of systems that cool computers by immersing them in fluids.

In an immersion system, a server rack operates submerged in a special fluid. Heat passes passively from the hardware to the fluid, which is pumped to a heat exchanger and then returned to the computer after the heat is removed.

“The liquids we use are not toxic, they’re biodegradable, they don’t evaporate, and they’re designed to last for 15 or more years,” explained Gregg Primm, vice president for global marketing at GRC, a provider of immersion cooling technology.

“The fluids are about 1200 times more efficient at removing heat than air is,” he told TechNewsWorld. “Air is actually a bad heat exchange medium.”

“Some fairly small changes need to be made — like removing air fans and modifying heat sinks — to make the systems behave better in liquid, but otherwise, it’s the same as an air-cooled server,” he said.

Although the most commonly used fluids are petroleum-based, he added, fluids based on vegetable oil are starting to appear on the market.

Cooling Hot Chips

Abhijit Sunil, a senior analyst with Forrester Research, noted that liquid cooling is coming into the limelight now because of the energy needs of microchips.

“Modern CPUs and GPUs consume tremendous power, and this, in turn, places pressure on data centers to have effective cooling techniques,” he told TechNewsWorld.

“Diverting power to cooling techniques increases the PUE of data centers,” he continued. “Thus, more effective cooling techniques are important. This is especially true in data centers built to handle specialized workloads such as AI/ML.”

Power usage effectiveness (PUE) is a metric used to measure the energy efficiency of a data center. PUE is determined by dividing the total amount of power entering a data center by the power used to run the IT equipment within it.
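The arithmetic behind that metric can be sketched in a few lines of Python. This is only an illustration of the ratio described above: the function name `pue` and the kilowatt figures are hypothetical and do not come from any vendor or analyst quoted here.

```python
def pue(total_facility_kw: float, it_equipment_kw: float) -> float:
    """Return power usage effectiveness: total facility power / IT power.

    Both arguments are assumed to be average power draws in kilowatts
    measured over the same period.
    """
    if it_equipment_kw <= 0:
        raise ValueError("IT equipment power must be positive")
    if total_facility_kw < it_equipment_kw:
        raise ValueError("total facility power cannot be less than IT power")
    return total_facility_kw / it_equipment_kw


if __name__ == "__main__":
    # Hypothetical facility: 1,000 kW of IT load plus 500 kW of cooling
    # and other overhead.
    print(f"{pue(1500.0, 1000.0):.2f}")

    # Same IT load with overhead cut to 100 kW (illustrative only).
    print(f"{pue(1100.0, 1000.0):.2f}")
```

A value of 1.0 would mean every watt entering the facility reaches the IT equipment, so a lower PUE is better. Power diverted to cooling raises the numerator without changing the denominator, which is why it pushes the figure up.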

“The power being consumed by GPUs and CPUs now is growing very, very fast,” added Primm. “Typical systems are 400 watts. Some systems are starting to come out at 700 watts.”

“These things are consuming a huge amount of power and, as a result, producing a huge amount of heat,” he explained. “We’re hitting the point where air can’t cool these chips anymore. You can’t make the air cold enough and move it fast enough to cool systems generating that much heat. So liquid cooling is the only option.”

“We have heard from most major infrastructure vendors that investing in liquid cooling is a major strategy for them going forward,” added Sunil. “We have also heard from data center operators about the importance of investing in liquid cooling.”

Place Outside Data Center

Some technologists, though, believe immersion cooling can be better used outside the data center.

“Generally, immersion cooling isn’t as effective in a data center as other more targeted cooling technologies like warm water cooling,” observed Rob Enderle, president and principal analyst at the Enderle Group, an advisory services firm in Bend, Ore.

“However, for distributed servers outside of a data center, it is better because it protects the server from physical interference and environmental issues, and it is particularly ideal in very harsh environments,” he told TechNewsWorld.

“Data centers are typically isolated and are equipped for cooling servers, so moving to immersion is generally overkill,” he said. “It is where you don’t have a data center that this technology truly shines.”

Gartner analysts Jeffrey Hewitt and Philip Dawson came to a similar conclusion about immersion cooling in the technology and advisory company’s “Hype Cycle for Edge Computing” report for 2022.

“Immersion-cooled systems are smaller, quieter, and more efficient than traditional rack systems,” wrote Hewitt and Dawson. “Their initial value will most likely come from outside the data center, where they enable higher compute density at higher energy efficiency and lower noise.”

“Although the capital cost of the system is typically higher because of the mechanical and cooling infrastructure involved, there are environments where these systems outperform any alternative,” they added.

Maintenance Challenges

Hewitt and Dawson also noted that immersion cooling enables edge servers to operate in hostile locations.

Medium-scale edge computing nodes or wireless telecom nodes often operate under the thermal, spatial, and power constraints of a remote server bunker, pole, or closet, they explained. These space and power efficiencies also benefit shipboard or truck-based mobile data centers.

They added that these systems represent a practical on-premises solution for certain GPU-centric small-scale supercomputing tasks. Isolation of the components also facilitates their use in locations with high levels of particulate pollutants, like dust.

Despite their benefits, immersion cooling systems can be challenging to maintain. “You need to train the people servicing the computers, build drains for coolant leakage, and provide special protective gear so the workers can work with the coolant,” Enderle said.

He added that the coolant is non-conductive, so the typical concerns over electrical gear and water don’t apply. “However,” he continued, “you must avoid contaminating the coolant or creating a significant leak.”

Enderle also noted that the heat exchangers for the coolant might need to be placed outside the building housing the system, or the HVAC capacity inside the building might need to be increased to handle the extra heat. Otherwise, the facility may become uncomfortably warm.

Institutional Resistance

Primm pointed out that immersion cooling systems may also face institutional challenges. “Data center operators and designers are very change-averse,” he maintained. “To most people, this is a new way of doing something that they’re used to doing in another way.”

“What data center operators and designers are truly expert at is air handling,” he continued. “They’ve had to develop tremendous skill in cooling air, moving it past hot components and out of the data center to ensure data center availability and reliability. Totally changing that infrastructure to something that eliminates that creates a fear-of-the-new type of thing.”

“One of the biggest things is that many data center operators have a tremendous investment in their air-cooled infrastructure, and they want to get the most value out of that for as long as they can,” he added.

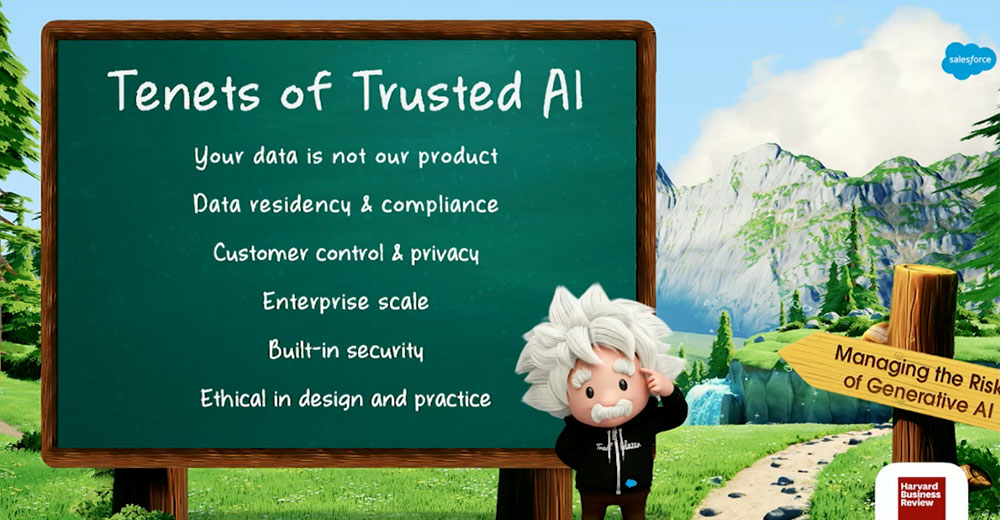

According to Forrester, IT can be a significant source of carbon emissions. While 2% to 4% of global energy use might not seem like a lot compared with other sources of energy demand, the research firm noted, it can be a notable amount depending on the country and industry. For example, data centers account for 14% of Ireland’s electricity use.

“Sustainability is becoming a big decider for people,” Primm said. “They want to reduce their carbon footprint, and one of the fastest ways to do that in the data center is to eliminate air cooling as much as you can.”